MVRsimulation introduces Joint Fires Training with Digitally Aided CAS (DACAS)

MVRsimulation offers a first look at the next generation of joint fires training: the mixed-reality Deployable Joint Fires Trainer (DJFT) with Digitally Aided CAS (DACAS).

The DJFT supports datalink messaging to execute Link 16 and VMF DACAS in accordance with current joint tactics, techniques, and procedures.

Integrated with the Varjo XR-3 mixed-reality headset, the DJFT contains all equipment required to run dynamic, full-spectrum JTAC/Joint Fires training scenarios, using Battlespace Simulations' Modern Air Combat Environment (MACE) and MVRsimulation's Virtual Reality Scene Generator (VRSG).

With its fully immersive environment, the DJFT is designed to meet US Joint Fire Support Executive Steering Committee (JFS ESC) Memorandum of Agreement accreditation requirements for Type 1, 2, and 3 controls, as well as day, night, and laser controls.

Video: First look at the DJFT with DACAS

Watch MVRsimulation's Deployable Joint Fires Trainer with Digitally Aided CAS in action. In this mixed-reality training environment, the JTAC is fully immersed in the virtual world while also directly interacting with emulated physical SOFLAM and IZLID devices in the real world.

Fully immersive joint fires training

The DJFT enables mixed-reality training through integration with the Varjo XR-3 head-mounted display. The trainee is fully immersed in the virtual world created by MVRsimulation's VRSG 3D geospecific terrain, while simultaneously interacting with emulated physical SOFLAM and IZLID target designators in the real world. The XR-3's ultra-low-latency, dual 12-megapixel video pass-through cameras let the user read and write without removing the headset, which helps maintain suspension of disbelief and keeps the observer engaged in the scenario.

Portable and self-contained

The DJFT is a modular, plug-and-play system composed of three or more stations, fully contained within two-person-portable welded aluminum cases.

The baseline system ships with three stations: an Instructor Operator Station, a Role Player Station, and an Observer Station. The Instructor Operator Station provides full dynamic control of the scenario and includes a terrain server that hosts MVRsimulation’s round-earth VRSG terrain. It can also connect to an external network to support live, virtual, and constructive (LVC) training. Scenarios are run on Battlespace Simulations’ Modern Air Combat Environment (MACE) and MVRsimulation’s VRSG. The 3D graphics are rendered by NVIDIA GeForce RTX 3090 graphics cards.

The system can be configured to meet a unit's specific requirements, via the addition of multiple Observer and Role Player Stations, all networked with a single Instructor Operator Station.

Digitally Aided CAS

MACE supports DACAS in accordance with JFIRE Appendix E, using real-world datalink messages to execute both the Link 16/Situational Awareness Data Link (SADL) message flow and the VMF message flow. Using MACE's built-in JREAP-C server or the raw VMF gateway, the DJFT can be integrated with live training scenarios.

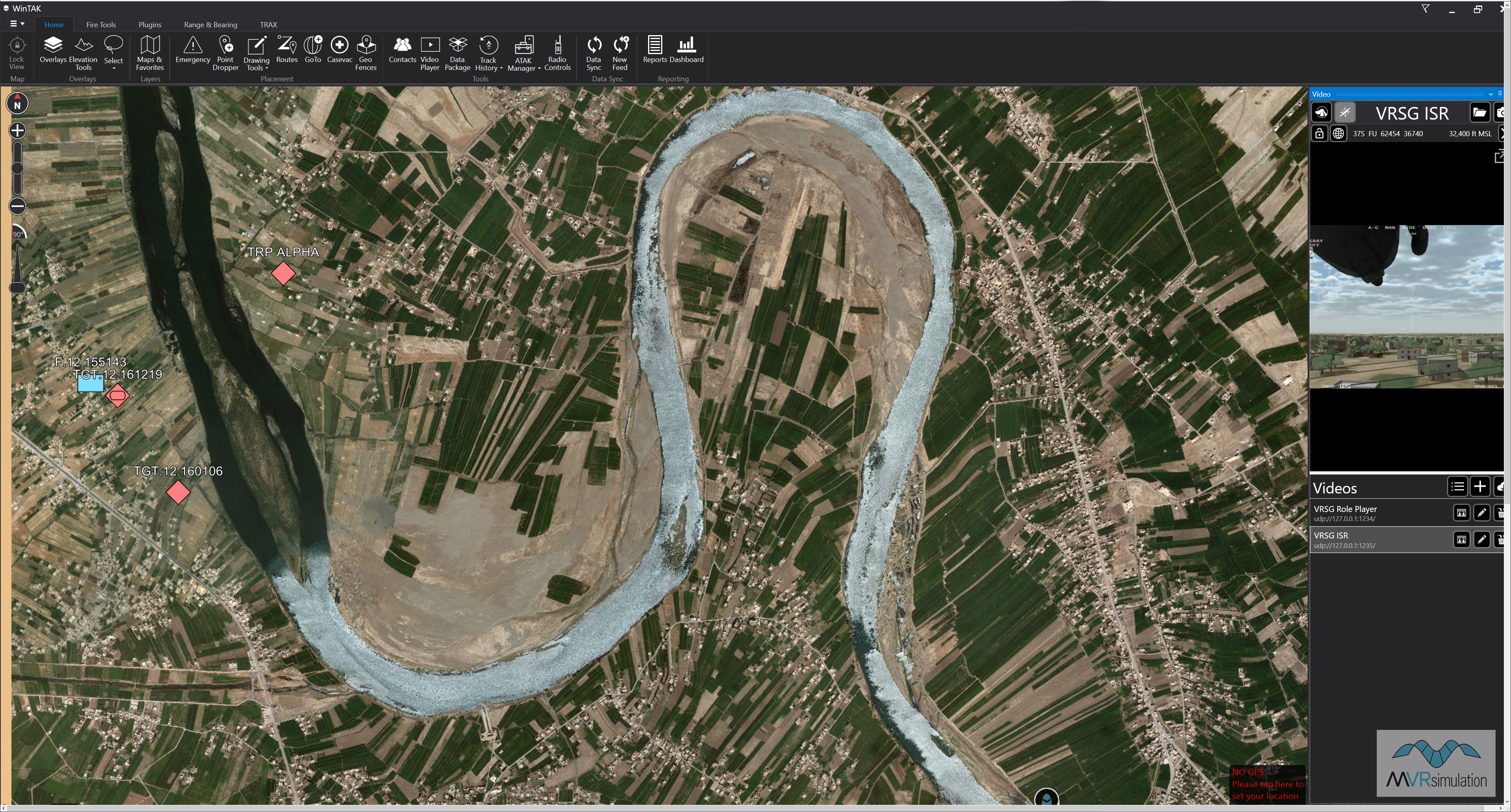

MACE's datalink capabilities, along with VRSG's KLV metadata, permit the seamless integration of the DJFT with real-world targeting software suites including ATAK/WinTAK, and datalink message middleware such as TRAX/ATRAX. The DJFT is ready for integration with a unit's preferred battlefield targeting and communication hardware and software upon formal government approval.

The image above shows a close-up of WinTAK integrated in real time with Battlespace Simulations' MACE and MVRsimulation's 3D geospecific terrain, including the simulated sensor feed from the Role Player.
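To illustrate what consuming KLV metadata involves on the receiving side, here is a minimal Python sketch that walks back-to-back Key-Length-Value triplets with BER-encoded lengths. It assumes the metadata follows the SMPTE KLV encoding used by MISB standards such as ST 0601; the function names `read_ber_length` and `iter_klv`, the single-byte tag assumption, and the sample payload (including its tag numbers) are hypothetical and illustrative, not part of VRSG, ATAK, or any MVRsimulation interface.

```python
from typing import Iterator, Tuple


def read_ber_length(buf: bytes, offset: int) -> Tuple[int, int]:
    """Decode a BER length at `offset`; return (length, offset just past it)."""
    if offset >= len(buf):
        raise ValueError("missing BER length")
    first = buf[offset]
    if first < 0x80:  # short form: the byte itself is the length (0-127)
        return first, offset + 1
    n = first & 0x7F  # long form: the next n bytes hold the length, big-endian
    if n == 0 or offset + 1 + n > len(buf):
        raise ValueError("invalid BER long-form length")
    return int.from_bytes(buf[offset + 1:offset + 1 + n], "big"), offset + 1 + n


def iter_klv(buf: bytes, key_size: int = 16) -> Iterator[Tuple[bytes, bytes]]:
    """Yield (key, value) pairs from back-to-back KLV triplets.

    key_size=16 suits a SMPTE universal key; key_size=1 suits a local set
    whose tags are all below 128 (an assumption: larger tags are multi-byte).
    """
    offset = 0
    while offset < len(buf):
        key = buf[offset:offset + key_size]
        if len(key) < key_size:
            raise ValueError("truncated key")
        length, offset = read_ber_length(buf, offset + key_size)
        value = buf[offset:offset + length]
        if len(value) < length:
            raise ValueError("truncated value")
        yield key, value
        offset += length


# Hypothetical local-set payload with illustrative tags: tag 2 carries an
# 8-byte value; tag 120 carries 200 bytes, so its length needs the BER long
# form (0x81 followed by one length byte, 0xC8 = 200).
payload = bytes([2, 8]) + (1_000_000).to_bytes(8, "big") + bytes([120, 0x81, 200]) + bytes(200)
for key, value in iter_klv(payload, key_size=1):
    print(key[0], len(value))  # prints "2 8", then "120 200"
```

The BER long form is what lets a single parser handle both short fields and large variable-length values without knowing the tag semantics in advance; mapping tags to meanings would come from the relevant MISB standard.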

Fully interoperable simulators

The fully networked DJFT supports real-time interaction with fixed-wing, rotary-wing, and RPAS assets, including MVRsimulation’s fixed-wing Part Task Mission Trainer (PTMT). The PTMT pilot can join the scenario as an additional Role Player. Also equipped with a mixed-reality headset, the PTMT role player is immersed in the training scenario and interacts with it via a Thrustmaster HOTAS and Battlespace Simulations' MACE software.

Eye-tracking enabled

With the integrated Varjo XR-3 mixed-reality headset, the wearer’s eye gaze can be tracked, recorded, and reviewed for an informed after-action debrief. During the scenario, VRSG tracks the Observer's head position and orientation, tracks the gaze vector using the Varjo device’s pupil-tracking functionality, and then depicts the gaze of each eye independently as a color-coded 3D cone. Eye-tracking data is exported via DIS and can be saved to a PDU log for later review. This feature is available in VRSG version 7.
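Reviewing a PDU log starts with walking the PDUs it contains. The sketch below reads the standard 12-byte IEEE 1278.1 DIS PDU header and uses its length field to step from one PDU to the next. It assumes the log is simply raw PDUs stored back to back, with no per-record framing; VRSG's actual log format, and which PDU type carries the eye-tracking data, are not specified here, so `iter_pdus`, `PduHeader`, and the sample data are hypothetical.

```python
import struct
from typing import Iterator, NamedTuple, Tuple

# IEEE 1278.1 DIS PDU header: 12 bytes in network (big-endian) byte order.
# The last two bytes are padding in DIS 6, or PDU status + padding in DIS 7;
# this sketch reads them but ignores them.
DIS_HEADER = struct.Struct(">BBBBIHH")


class PduHeader(NamedTuple):
    protocol_version: int
    exercise_id: int
    pdu_type: int
    protocol_family: int
    timestamp: int
    length: int  # total PDU length in bytes, header included


def iter_pdus(data: bytes) -> Iterator[Tuple[PduHeader, bytes]]:
    """Yield (header, raw PDU bytes) from a buffer of back-to-back PDUs.

    Assumes raw concatenated PDUs with no per-record framing (hypothetical
    log layout). A trailing fragment shorter than a header is ignored.
    """
    offset = 0
    while offset + DIS_HEADER.size <= len(data):
        fields = DIS_HEADER.unpack_from(data, offset)
        header = PduHeader(*fields[:6])
        if header.length < DIS_HEADER.size or offset + header.length > len(data):
            raise ValueError(f"malformed or truncated PDU at offset {offset}")
        yield header, data[offset:offset + header.length]
        offset += header.length


# Two synthetic 16-byte PDUs: DIS version 7, exercise 1, PDU type 1 (Entity State).
pdu = DIS_HEADER.pack(7, 1, 1, 1, 0, 16, 0) + bytes(4)
for header, raw in iter_pdus(pdu * 2):
    print(header.pdu_type, header.length)  # prints "1 16" for each PDU
```

Filtering the yielded headers by `pdu_type` and `exercise_id` would be a natural next step for isolating one Observer's records during a debrief.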

This electronic newsletter may be redistributed without restriction in any format as long as the contents are unaltered. Previous issues of MVRsimulation News can be found at https://www.mvrsimulation.com/aboutus/newsarchive.html.

Copyright © 2022, MVRsimulation Inc.

MVRsimulation Inc.

57 Union Ave., Sudbury, MA 01776 USA

MVRsimulation, the MVRsimulation logo, and VRSG (Virtual Reality Scene Generator) are registered trademarks, and the phrase "geospecific simulation with game quality graphics" is a trademark of MVRsimulation Inc. All other brands and names are property of their respective owners. MVRsimulation's round-earth VRSG terrain architecture is protected by US Patent 7,425,952.
